Our society’s interplanetary ambitions now face a crucial challenge: designing and building habitable infrastructure that will allow humans to move beyond Earth and settle in environments long considered inhospitable. Europe, heir to a centuries-old tradition of explorers and innovators, is preparing to play a leading role in this new phase of human history.

Growing concerns about centralised and proprietary artificial intelligence may find an answer in the open source world, which offers the prospect of more transparent, ethical, and community-driven tools. But what lies behind the expression “open source artificial intelligence models”, and who is responsible for regulating and governing the growth of these new tools?

As is now well known, the recent rise of artificial intelligence stems from the convergence of two key factors: the vast amounts of data generated by contemporary digital life and readily available online, and the steady advance of computational power.

While the accumulation of data is an intuitive phenomenon, rooted in our daily experience, the evolution of computing capabilities follows more complex and less tangible dynamics. Yet these are crucial to fully understanding the nature and limitations of AI.

In today’s context, digital sovereignty plays a strategic role, directly affecting the ability of governments and institutions to manage data, infrastructure, and technology independently. According to Fausto Gernone, a competition economist specialising in digital ecosystems, Europe faces a structural delay that stems from decades of neglecting issues now increasingly central to its technological and political self-determination.

Oxford University Press, publisher of the Oxford English Dictionary, chose “brain rot” as its word of the year for 2024. The expression aptly captures the feeling of mental numbness that sets in after hours of mindless scrolling on social media.

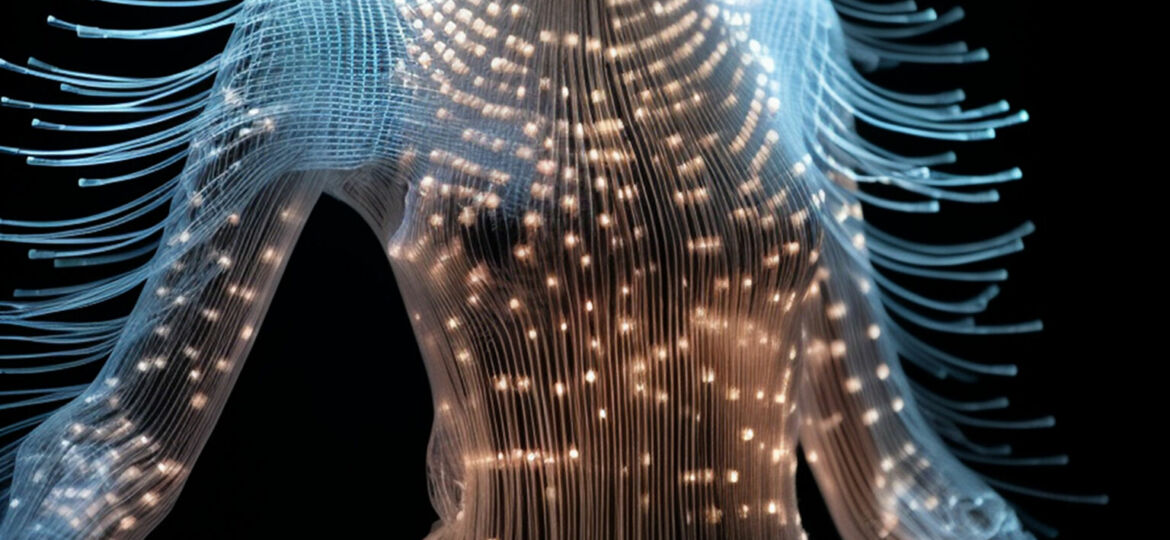

The expansion of design possibilities in architecture depends on an expansion of our knowledge of reality. In this scenario, artificial intelligence, with its dual exploratory and generative nature, is emerging as a tool capable of shaping new forms and nurturing new knowledge. But, as happens whenever one enters unexplored territory, each step forward requires firmly consolidating the ground just gained. The same process, however invisible and intangible, is now under way with AI. The new scenarios it opens up make it necessary to redefine ethical frameworks, which are essential for guiding future choices and for establishing criteria by which to measure the quality of new processes.

Although machine learning algorithms may seem abstract and difficult to decipher, they rely on a series of structured processes that make them vulnerable to specific threats. Understanding the risks these tools are exposed to is essential for using them with greater awareness.

George Guida, a researcher at the Harvard Laboratory for Design Technologies, is co-founder of ArchiTAG and xFigura. An expert in AI for architecture, he brings technology and design together in international and academic projects.

The original promise of the Internet, a free and open space for aggregation and democratic participation, has been betrayed. This is the thesis at the heart of *Rebooting the System. How we broke the Internet and why it is up to us to fix it*, an essay by journalist Valerio Bassan, an expert in media and technology, published by Chiarelettere in 2024.

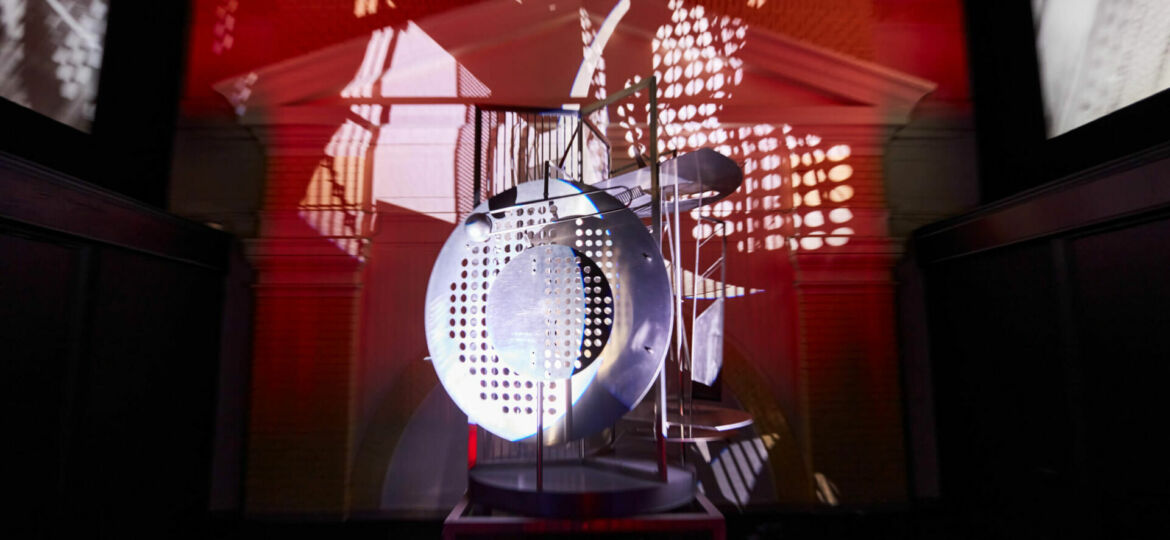

Opening the eighth edition of Fotonica, Rome’s festival of audio, visual and digital art, is an installation by Hungarian artist David Szauder inspired by an iconic work of László Moholy-Nagy, the painter, photographer, designer and constructivist theorist: *Light Prop for an Electric Stage*.